Generative AI has transitioned rapidly from experimental demos to mainstream deployments, but excitement often outpaces practical outcomes. While tools like large language models and image generators have achieved widespread awareness, adoption remains uneven and expectations can be misaligned. Surveys show that 95 percent of U.S. companies now use generative AI, yet more than 80 percent report no tangible impact on enterprise‑level EBIT from these investments, according to Bain & Company. IT teams should view early projects as learning opportunities rather than instant game‑changers.

Building a Solid Data Foundation

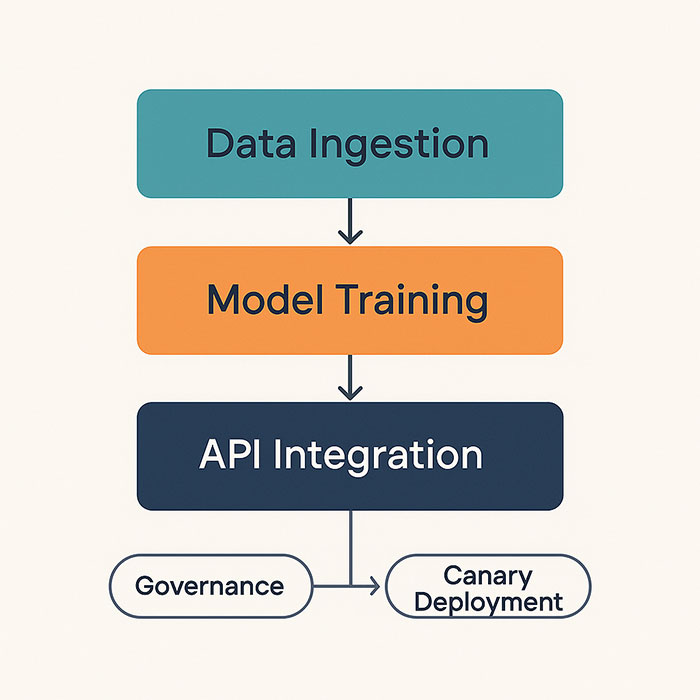

Robust data pipelines are the bedrock of any successful AI initiative. Generative models require high‑quality, well‑governed datasets to produce reliable results. Enterprises are investing heavily in infrastructure upgrades—such as custom silicon, cloud migrations, and systems to measure AI efficacy—to ensure that data flows smoothly from source to model. Quarterly reports from leading consultancies also highlight that data readiness and governance are among the top priorities for scaling GenAI projects beyond the pilot stage.
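A simple data-readiness gate is one way to make "high-quality, well-governed datasets" concrete before records reach a model. The sketch below assumes records arrive as Python dicts. The required field names (`id`, `text`, `source`), the case-insensitive deduplication rule, and the 0.9 pass-rate threshold in the hypothetical `is_ready` helper are illustrative choices, not a standard.

```python
from dataclasses import dataclass, field

# Hypothetical schema: every record must carry these keys before it is used
# for prompting, retrieval, or fine-tuning.
REQUIRED_FIELDS = ("id", "text", "source")


@dataclass
class ReadinessReport:
    total: int = 0
    missing_fields: int = 0
    empty_text: int = 0
    duplicates: int = 0
    passed: list = field(default_factory=list)

    @property
    def pass_rate(self) -> float:
        # Share of input records that survived every check.
        return len(self.passed) / self.total if self.total else 0.0


def check_readiness(records: list) -> ReadinessReport:
    """Run basic quality checks and keep only records that pass all of them."""
    report = ReadinessReport(total=len(records))
    seen = set()
    for rec in records:
        # Reject records that lack any required field.
        if any(name not in rec for name in REQUIRED_FIELDS):
            report.missing_fields += 1
            continue
        text = str(rec["text"]).strip()
        # Reject records whose text is empty after trimming whitespace.
        if not text:
            report.empty_text += 1
            continue
        # Treat case-insensitive exact matches as duplicates.
        key = text.lower()
        if key in seen:
            report.duplicates += 1
            continue
        seen.add(key)
        report.passed.append(rec)
    return report


def is_ready(report: ReadinessReport, min_pass_rate: float = 0.9) -> bool:
    # Hypothetical promotion rule: block the pipeline if too many records fail.
    return report.pass_rate >= min_pass_rate


if __name__ == "__main__":
    sample = [
        {"id": 1, "text": "Reset VPN password", "source": "helpdesk"},
        {"id": 2, "text": "reset vpn password ", "source": "helpdesk"},
        {"id": 3, "text": "   ", "source": "wiki"},
        {"id": 4, "text": "Printer offline"},
    ]
    report = check_readiness(sample)
    print(len(report.passed), report.duplicates, report.empty_text,
          report.missing_fields, report.pass_rate, is_ready(report))
```

In practice, a gate like this would sit at the boundary between ingestion and model-facing stores, and its counts would be tracked over time alongside the AI-efficacy metrics mentioned above.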

Integrating Generative AI with Existing Systems

Embedding generative capabilities into legacy applications and workflows calls for thoughtful integration. A pragmatic approach involves:

- Exposing model endpoints via microservices or APIs, enabling modular adoption.
- Leveraging no‑code/low‑code platforms for rapid prototyping, then refactoring to enterprise‑grade pipelines.
- Implementing feature flags or canary deployments to roll out AI‑driven functionality safely.
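The feature-flag and canary approach can be sketched as a deterministic percentage rollout. Each user is hashed into one of 100 buckets, and only buckets below the rollout percentage get the AI path. This is a minimal illustration, not any specific feature-flag product. The flag name `genai-ticket-summary` and the helpers `call_model_endpoint` and `legacy_summary` are hypothetical stand-ins for a model microservice and the existing behavior.

```python
import hashlib


def rollout_bucket(user_id: str, flag_name: str) -> int:
    """Deterministically map a user to a bucket in [0, 100) for one flag."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def ai_feature_enabled(user_id: str, flag_name: str, rollout_percent: int) -> bool:
    """True if the user falls inside the canary cohort for this flag."""
    if not 0 <= rollout_percent <= 100:
        raise ValueError("rollout_percent must be between 0 and 100")
    return rollout_bucket(user_id, flag_name) < rollout_percent


def call_model_endpoint(text: str) -> str:
    # Hypothetical stand-in for an HTTP call to an internal model microservice.
    return "[AI summary] " + text[:40]


def legacy_summary(text: str) -> str:
    # Existing non-AI behavior: return the first sentence unchanged.
    return text.split(".")[0]


def summarize_ticket(text: str, user_id: str, rollout_percent: int = 10) -> str:
    # Route only the canary cohort to the AI path; everyone else keeps the old path.
    if ai_feature_enabled(user_id, "genai-ticket-summary", rollout_percent):
        return call_model_endpoint(text)
    return legacy_summary(text)
```

Because a user's bucket depends only on the flag name and user ID, raising `rollout_percent` from 10 to 50 keeps the original cohort enabled and adds new users. Setting it to 0 acts as a kill switch.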

OpenAI’s recent strategic guide for enterprise adoption outlines seven field‑tested strategies—such as aligning model choice to use case and iterating on prompt design—that help IT architects bridge the gap between research prototypes and production systems.
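One way to put "aligning model choice to use case" and "iterating on prompt design" into practice is to keep both decisions in versioned configuration rather than scattering them through application code. The sketch below is not taken from OpenAI's guide. The routing table, model identifiers, token limits, and prompt versions are hypothetical placeholders, and the returned dict is a provider-neutral illustration, not any vendor's request schema.

```python
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class UseCaseConfig:
    model: str              # placeholder model identifier, not a real product name
    max_output_tokens: int
    prompt_version: str


# Hypothetical routing: a small, fast model for high-volume classification and a
# larger model for long-form drafting.
ROUTES = {
    "ticket_triage": UseCaseConfig("small-fast-model", 16, "triage-v2"),
    "doc_drafting": UseCaseConfig("large-general-model", 1024, "draft-v1"),
}

# Prompt templates are versioned so each change can be evaluated and rolled back.
PROMPTS = {
    "triage-v1": Template("Classify this ticket: $ticket"),
    "triage-v2": Template(
        "Classify the IT ticket as one of: network, access, hardware. "
        "Answer with the category only.\nTicket: $ticket"
    ),
    "draft-v1": Template("Write a first-draft runbook section about: $topic"),
}


def build_request(use_case: str, **fields: str) -> dict:
    """Assemble a provider-neutral request for the given use case."""
    cfg = ROUTES[use_case]  # KeyError for an unknown use case is intentional
    # Template.substitute raises KeyError if a placeholder value is missing.
    prompt = PROMPTS[cfg.prompt_version].substitute(**fields)
    return {
        "model": cfg.model,
        "max_output_tokens": cfg.max_output_tokens,
        "prompt": prompt,
        "prompt_version": cfg.prompt_version,  # logged so results can be compared per version
    }


if __name__ == "__main__":
    print(build_request("ticket_triage", ticket="VPN drops every hour"))
```

Recording `prompt_version` with each request makes it possible to compare output quality between `triage-v1` and `triage-v2` before promoting a prompt change.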

Ensuring Robust Governance and Security

As generative AI becomes more prevalent, governance frameworks must keep pace. Regulatory bodies and professional organizations are already mandating policies to safeguard privacy, mitigate bias, and maintain transparency. For example, California’s Judicial Council is preparing rules requiring all courts either to adopt AI policies or to ban public AI tools outright, with an emphasis on confidentiality, bias mitigation, and user disclosure (Reuters). In parallel, enterprises are embedding audit trails, access controls, and human‑in‑the‑loop checkpoints to meet both internal risk standards and emerging regulations (Deloitte).
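The three controls named above (audit trails, access controls, and human‑in‑the‑loop checkpoints) can be combined in a thin gateway in front of a model. The sketch below is a minimal illustration under stated assumptions. The allowed roles and the keyword list that triggers review are hypothetical policy values, and `generate` stands in for whatever function actually calls the model.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable

# Hypothetical policy values; real ones would come from the organization's risk team.
ALLOWED_ROLES = {"analyst", "engineer"}
REVIEW_TERMS = ("salary", "diagnosis", "password")


@dataclass
class GovernedGateway:
    audit_log: list = field(default_factory=list)     # append-only JSON lines
    review_queue: list = field(default_factory=list)  # outputs awaiting a human

    def handle(self, user: str, role: str, prompt: str,
               generate: Callable[[str], str]) -> str:
        # Access control: unauthorized roles never reach the model.
        if role not in ALLOWED_ROLES:
            self._audit(user, role, prompt, "denied")
            raise PermissionError(f"role {role!r} may not use this tool")
        output = generate(prompt)
        # Human-in-the-loop: hold outputs that mention sensitive terms.
        if any(term in output.lower() for term in REVIEW_TERMS):
            self._audit(user, role, prompt, "pending_review")
            self.review_queue.append({"user": user, "output": output})
            return "Response held for human review."
        self._audit(user, role, prompt, "released")
        return output

    def _audit(self, user: str, role: str, prompt: str, decision: str) -> None:
        # Audit trail: store a hash of the prompt instead of the raw text to limit
        # exposure of confidential input.
        self.audit_log.append(json.dumps({
            "ts": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
            "decision": decision,
        }))


if __name__ == "__main__":
    gw = GovernedGateway()
    echo = lambda p: "Draft reply: " + p
    print(gw.handle("ana", "analyst", "summarize outage", echo))
    print(gw.handle("ana", "analyst", "reset my password", echo))
    print(len(gw.audit_log), len(gw.review_queue))
```

A keyword match is a deliberately crude trigger. A production checkpoint would more likely use a classifier or policy engine, but the control flow (check access, generate, decide, log every outcome) stays the same.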

Upskilling and Organizational Readiness

Technology alone won’t guarantee success; people and processes matter just as much. Despite strong interest, only a small fraction of companies consider themselves AI‑mature, and talent gaps persist in machine learning engineering, data science, and natural language processing (NLP). IT organizations should prioritize:

- Formal training programs and certifications in AI fundamentals and MLOps.
- Cross‑functional “AI champion” roles that bridge development, operations, and compliance teams.
- Blameless post‑mortems to capture lessons learned and refine best practices.

By investing in skills development and clear ownership models, teams can reduce time-to-value and ensure sustainable AI operations.

Turning AI Potential into Operational Excellence

Generative AI projects succeed when they’re treated as continuous journeys rather than one‑off experiments. Start with a narrowly scoped pilot—such as automating routine ticket triage or generating draft documentation—then measure impact against clear service‑level objectives. Iterate on data quality, integration patterns, and governance controls, celebrating early wins and learning from missteps. Over time, this continuous feedback loop transforms AI from a “nice‑to‑have” novelty into a core capability that drives reliability, efficiency, and strategic differentiation across the enterprise.
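To make "measure impact against clear service‑level objectives" concrete for the ticket‑triage example, a pilot can log each model suggestion alongside the category a human agent eventually assigned. The sketch below assumes such logging already exists. The 90 percent accuracy target and the 2,000 ms p95 latency target are hypothetical SLOs, and the nearest‑rank percentile is only one of several common definitions.

```python
from dataclasses import dataclass


@dataclass
class TriageResult:
    predicted: str      # category proposed by the model
    actual: str         # category the human agent finally assigned
    latency_ms: float   # end-to-end time to produce the suggestion


# Hypothetical service-level objectives agreed before the pilot starts.
SLO_MIN_ACCURACY = 0.90
SLO_MAX_P95_LATENCY_MS = 2000.0


def p95_nearest_rank(values: list) -> float:
    """95th percentile using the nearest-rank method."""
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # integer ceil(0.95 * n), 1-based
    return ordered[rank - 1]


def evaluate_pilot(results: list) -> dict:
    """Compare pilot accuracy and tail latency against the SLOs above."""
    if not results:
        raise ValueError("no pilot results to evaluate")
    accuracy = sum(r.predicted == r.actual for r in results) / len(results)
    p95 = p95_nearest_rank([r.latency_ms for r in results])
    return {
        "accuracy": accuracy,
        "p95_latency_ms": p95,
        "meets_slo": accuracy >= SLO_MIN_ACCURACY and p95 <= SLO_MAX_P95_LATENCY_MS,
    }
```

Running this evaluation on each iteration of the pilot gives the feedback loop a fixed yardstick, so changes to data quality, prompts, or integration can be judged against the same targets.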