Over the past two years, AI coding assistants have shifted from experimental tools to everyday necessities for many IT teams. GitHub Copilot, Amazon CodeWhisperer, Replit Ghostwriter, and ChatGPT’s Code Interpreter mode have exploded in popularity, offering autocomplete suggestions, full-function generation, bug explanations, and even automated documentation.

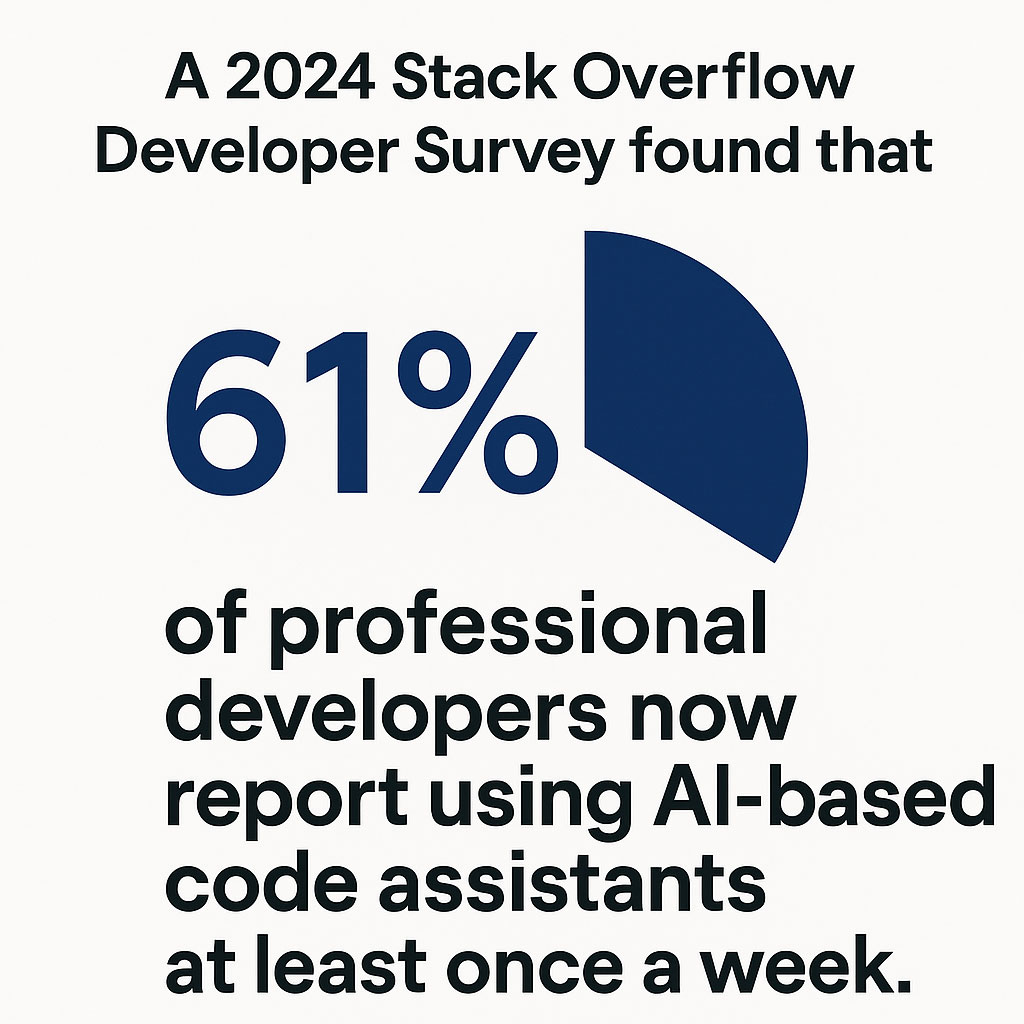

A 2024 Stack Overflow Developer Survey found that 61% of professional developers now report using AI-based code assistants at least once a week. Among junior developers, that number climbs to nearly 75%. The promises are tempting: faster delivery times, less “grunt work,” and even learning new coding patterns by example.

But with these benefits come serious risks.

Security experts have sounded alarms:

- A Stanford study showed that code written with AI assistance was more likely to include security vulnerabilities than manually written code.
- OpenAI’s Codex model (the model that originally powered GitHub Copilot) has, at times, suggested code snippets that mirror copyrighted material or insecure patterns.
- The legal landscape is still murky, especially concerning who holds liability if AI-written code infringes copyrights or introduces a breach.
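
To make "insecure patterns" concrete, here is a minimal sketch of one classic flaw that reviewers look for in generated code: building SQL by string formatting. It is an illustration only and is not taken from the Stanford study or from any specific assistant's output; the table, the function names, and the sample data are all made up for the example.

```python
import sqlite3


def make_db():
    """Create a throwaway in-memory database with two illustrative users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "admin"), ("bob", "user")],
    )
    return conn


def find_user_unsafe(conn, name):
    # Vulnerable: the input is spliced into the SQL text, so a crafted
    # value can change the meaning of the query (SQL injection).
    query = f"SELECT name, role FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Safer: a bound parameter is always treated as data, never as SQL.
    query = "SELECT name, role FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()


if __name__ == "__main__":
    conn = make_db()
    payload = "x' OR '1'='1"
    print(len(find_user_unsafe(conn, payload)))  # every row leaks
    print(len(find_user_safe(conn, payload)))    # no rows match
```

Both functions look equally plausible at a glance, which is why a suggestion like the first one can slip through when it is accepted without review.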

Leading firms are taking a cautious but strategic approach:

- Red teams and blue teams now test AI-generated code for vulnerabilities before it hits production.
- Scanning tools such as GitGuardian (secret detection), SonarCloud (static analysis), and Snyk (dependency and code vulnerability scanning) are being integrated into CI/CD pipelines to catch flaws early.
- “Human-in-the-loop” policies require that every AI-suggested code block be peer-reviewed by a developer before it is accepted.
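
A human-in-the-loop rule is easier to enforce when a pipeline can check it. The sketch below assumes a team convention, invented for this example, in which AI-assisted commits carry an `AI-Assisted: yes` trailer and reviewers sign off with a `Reviewed-by:` trailer. The `Commit` class and the `unreviewed_ai_commits` function are hypothetical names, not part of any tool mentioned above.

```python
from dataclasses import dataclass


@dataclass
class Commit:
    sha: str
    author: str
    message: str


def trailers(message):
    """Collect 'Key: value' lines from the final paragraph of a message."""
    last_paragraph = message.strip().split("\n\n")[-1]
    found = {}
    for line in last_paragraph.splitlines():
        key, sep, value = line.partition(":")
        key = key.strip()
        # Trailer keys are single tokens such as "Reviewed-by".
        if sep and key and " " not in key:
            found.setdefault(key.lower(), []).append(value.strip())
    return found


def unreviewed_ai_commits(commits):
    """Return SHAs of AI-assisted commits with no independent reviewer."""
    flagged = []
    for commit in commits:
        found = trailers(commit.message)
        ai_assisted = any(
            value.lower() in ("yes", "true")
            for value in found.get("ai-assisted", [])
        )
        # A sign-off by the commit's own author does not count as review.
        reviewers = [
            name for name in found.get("reviewed-by", [])
            if name and name != commit.author
        ]
        if ai_assisted and not reviewers:
            flagged.append(commit.sha)
    return flagged
```

A CI job could fail the build whenever this list is non-empty. The check only works if developers label AI-assisted commits honestly, so it complements peer review rather than replacing it.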

What IT Leaders Are Advising:

- Set Internal AI Usage Policies: Clarify when, how, and where AI can assist coding, and where manual work is non-negotiable.
- Train for Awareness: Developers should understand AI’s limitations and recognize the signs of unreliable output, such as calls to APIs that do not exist or outdated, insecure patterns.
- Prioritize Security Reviews: Assume that AI-written code is untrusted until thoroughly validated.
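
One way to make a usage policy concrete is to write it down as data that a script can check. The following is a minimal sketch under stated assumptions: the directory patterns, the `MANUAL_ONLY_PATTERNS` name, and the `policy_violations` function are all hypothetical, and the list of AI-assisted files is assumed to come from whatever labeling convention the team already uses.

```python
from fnmatch import fnmatchcase

# Hypothetical policy: areas where manual work is non-negotiable.
MANUAL_ONLY_PATTERNS = ["src/auth/*", "src/crypto/*", "src/payments/*"]


def policy_violations(ai_assisted_files, patterns=MANUAL_ONLY_PATTERNS):
    """Return AI-assisted files that fall under a manual-only pattern.

    In fnmatch patterns, '*' also matches '/' characters, so
    'src/auth/*' covers nested paths such as 'src/auth/tokens/jwt.py'.
    """
    return [
        path
        for path in ai_assisted_files
        if any(fnmatchcase(path, pattern) for pattern in patterns)
    ]
```

For example, given the files `src/auth/tokens/jwt.py`, `docs/setup.md`, and `src/payments/refund.py`, the function returns the first and third, which a reviewer would then need to rewrite or validate by hand.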

The near future will likely see a dual system: AI accelerates prototyping, scaffolding, and documentation tasks, while critical production logic stays firmly under human control.

Finding the Right Balance

AI coding tools are powerful collaborators, not replacements for professional judgment. The companies that find the right balance—leveraging speed without compromising security—will lead the next wave of software innovation.